Why Europe Lost Semiconductors

Could it be that Europe’s system simply isn’t compatible with semiconductors?

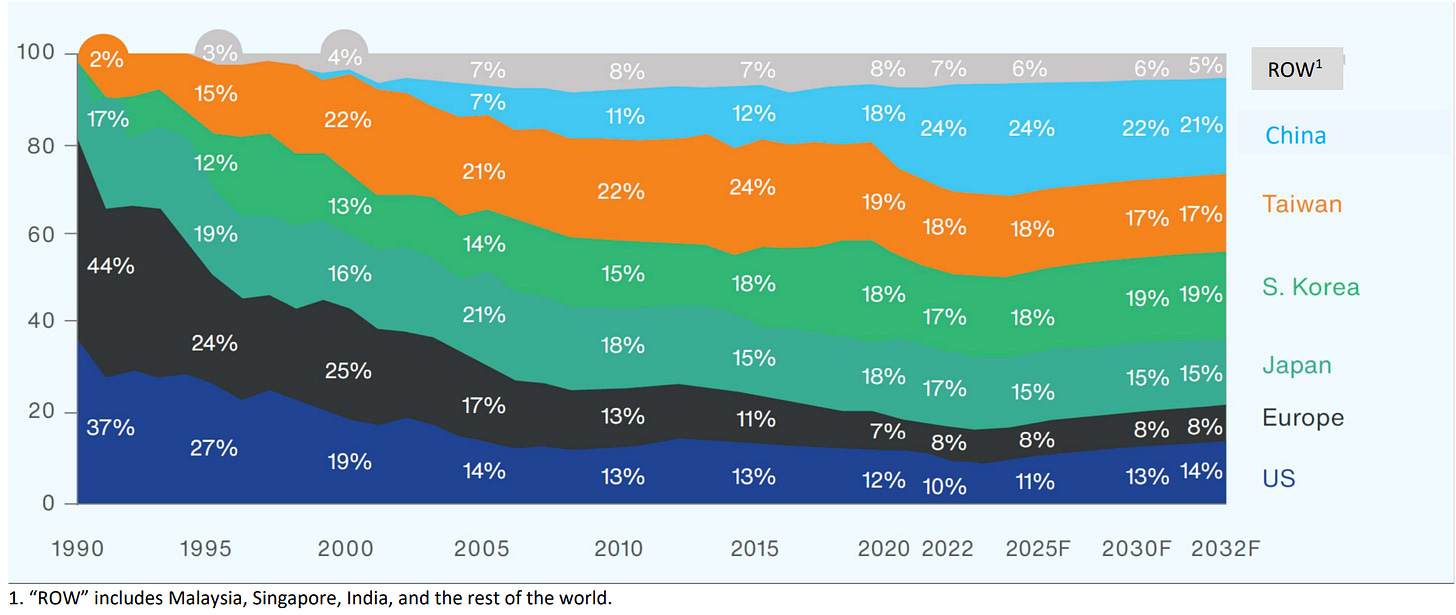

Today, Europe accounts for less than 10% of the global semiconductor market.

Considering that it once produced nearly half of the world’s semiconductors, it’s no exaggeration to say that Europe has fallen from grace in this industry.

In this piece, instead of writing another article focused on technology or market analysis, I’d like to take a step back and look into the past in order to understand the future.

This article examines “Why Europe Lost Semiconductors” from three perspectives:

① The absence of market mechanisms

② The limits of bureaucratic industrial policy

③ The structural gap between Europe and the U.S./Asia in ecosystem dynamics

“Europe was once the cradle of semiconductors.”

You might not know this, but Europe was once not just a leader — it was a creator in the semiconductor industry.

Bell Labs invented the first transistor in late 1947. Just a few months later, in 1948, two German physicists working in Paris, Herbert Mataré and Heinrich Welker, independently developed a similar device called the “transistron.”

Their invention was well received by the French press, and in 1949 the French government held a major press conference to announce it to the public.

By the end of 1949, Westinghouse’s French subsidiary had even built a factory capable of mass-producing the transistron.

The First Miss: After witnessing the atomic bombing of Hiroshima, however, the French government decided to focus its resources on developing nuclear technology, cutting off state funding for the communications industry and leaving it to the private sector.

• Without government support, research on the transistron gradually faded away, and the inventors eventually departed — Welker joined Siemens, while Mataré emigrated to the United States.

Time then moved into the 1950s.

1950s: The Loss of Technological Leadership

France struggled with the unpredictability of early semiconductor manufacturing, which led to a more conservative approach — favoring abstract, theoretical research over practical development.

The “unpredictability” here refers to the fact that semiconductor manufacturing was inherently chaotic, difficult to control, and dependent on a complex mix of interdisciplinary knowledge.

Early semiconductor production was extremely inefficient: the precise techniques and know-how needed to reliably mass-produce devices like transistors had not yet been established.

While Bell Labs in the United States addressed this by openly sharing its production knowledge, dispersing expertise, and leveraging collective intelligence, France approached the problem in a closed, fragmented manner within separate academic circles — and failed.

As a result, France lost its early-mover advantage and, by the late 1950s, had fallen behind even its peers in Japan and across continental Europe.

As the clock moves into the 1960s, the integrated circuit emerges.

In the 1960s, the U.S. semiconductor industry was driven primarily by military demand—notably from the Minuteman II ICBM and the Apollo Program—with the Department of Defense purchasing nearly half of all chips produced.

Military applications demanded smaller, more power-efficient, and highly reliable devices, effectively forcing American companies to refine their technologies at an accelerated pace.

In contrast, Europe’s semiconductor demand came mainly from the consumer sector, not the military or government. In 1963, consumer products accounted for 56% of semiconductor demand in West Germany and 35% in France, compared with only 5% in the U.S.

Consumer markets prioritized price over performance.

European industrial giants like Philips and Siemens viewed semiconductors merely as components within finished products. They focused on incremental improvements in low-cost germanium transistor technology, believing that silicon was suitable only for high-temperature applications.

Meanwhile, American startups rapidly mass-produced silicon planar transistors, embracing disruptive innovation and widening the technological gap.

By this time, Europe was no longer just trying to catch up with the United States; it also had to defend itself against Japan, an emerging competitor.

Japan, through its consumer electronics industry, swiftly adopted transistor technology and caught up with Europe by implementing import restrictions and signing over 250 technology licensing agreements, which helped build its domestic manufacturing capacity.

France, for its part, launched the “Plan Calcul” computer development program in 1966, prioritizing the rapid creation of a national computer system — even at the expense of its domestic semiconductor industry.

In short, France—and much of Europe—was indifferent to nurturing its own semiconductor ecosystem.

In the end, European chip manufacturers failed to achieve the necessary production scale to keep up with the aggressive cost-reduction pace of their foreign competitors.

Looking back now, the 1960s can be seen as Europe’s last real opportunity to take leadership in the semiconductor industry.

However, that opportunity was lost, and from then on the gap would only keep growing.

1970s: The Rise of LSI Complexity and the Widening Gap

With the introduction of Intel’s 4004 microprocessor in 1971, the era of Large-Scale Integration (LSI) began — a period marked by a dramatic increase in circuit complexity.

In this new age, enormous capital investment was required for any new entrant to succeed.

In other words, what was once a craft-like, workshop-scale industry was now transforming into one defined by advanced equipment, sophisticated materials, and highly specialized talent — an industry with a genuine economic moat.

• In the United States, computers, rather than missiles or televisions, became the primary demand driver for high-performance LSI devices.

• Europe, however, lacked not only the production capacity to manufacture such advanced LSI chips but also the strong demand base necessary to justify their development.

European computer firms were much smaller than their American counterparts, military and avionics demand was weak, and the telecommunications market was deeply fragmented.

In other words, Europe was not a single unified market, but rather a collection of divided national markets, each with its own standards and regulations.

Moreover, European consumer electronics companies still wanted cheaper, simpler chips rather than cutting-edge, high-performance ones.

During this period, the United States was dominant — yet there was one semiconductor powerhouse determined to catch up: Japan.

In 1976, Japan launched the VLSI (Very Large Scale Integration) Project to counter IBM, investing hundreds of millions of dollars into a joint effort to develop next-generation technologies.

Although the project ultimately failed to achieve its goal of creating a “one-chip computer,” it played a crucial role in strengthening Japan’s semiconductor manufacturing industry — refining its technical capabilities and leading its companies to adopt CMOS technology, which would later become the global standard.

1980s: The Failure of National R&D Subsidies and Cooperation

In the 1980s, Europe’s information technology (IT) trade deficit surged dramatically — from $4.1 billion in 1980 to nearly $7 billion by 1985.

European companies tended to prefer technological alliances with U.S. and Japanese firms rather than collaborating within Europe itself.

(For example, Philips partnered with AMD and TI, while Siemens allied with Intel, Toshiba, and Fujitsu.)

Alarmed by the growing IT trade deficit, European governments began launching state-led projects and subsidy programs.

France announced a five-year microelectronics plan, while West Germany and the Netherlands jointly launched the “Mega-Projekt” to support Siemens and Philips — described at the time as “Europe’s last chance to fight back against the Japanese chip invasion.”

Although Siemens successfully demonstrated a 4-megabit chip, it had to license 1-megabit DRAM technology from Toshiba, and by then Japan’s NTT had already unveiled a 16-megabit DRAM, showing just how rapidly technology was advancing.

The Mega-Projekt later evolved in 1989 into JESSI (Joint European Submicron Silicon Initiative), but it suffered from major setbacks — Philips withdrew from the memory business, and Siemens abandoned its 16-megabit memory development plans after partnering with IBM.

As a result, the program’s budget was sharply reduced, marking yet another missed opportunity for Europe’s semiconductor ambitions.

1990s: The Shift of Semiconductor Hegemony to Asia, Europe Reduced to Specialized Niche Markets

In the 1990s, the technological and financial barriers to entering the semiconductor industry became extremely high — a 250nm memory fab cost between $1 billion and $2 billion.

This period saw the rise of the companies that still dominate the global semiconductor industry today: TSMC, Samsung, SK Hynix, and others.

As a result, Europe faced intensifying competition from Asia’s electronics supply chains, led by TSMC’s pioneering independent foundry model and conglomerate semiconductor giants like Samsung.

Europe lost the consumer electronics market to Asia, military and government spending declined, and the computing market remained firmly under the control of U.S. companies.

While Europe has maintained a strong presence in its remaining domains, such as the industrial, telecommunications, and automotive sectors, that presence now amounts to only about 10% of the global market.

Conclusion: There Were Reasons Europe Lost Semiconductors

Europe was one of the birthplaces of the semiconductor, but it failed to commercialize its invention.

When government support was needed, it withdrew.

And when private-sector demand was finally needed to drive the industry forward, governments stepped in too late — trying to lead from the top down.

In short, policy timing was completely out of sync.

Europe spent decades in this cycle of hesitation and misalignment.

And now, when it declares it will double its semiconductor market share, one can’t help but ask — is that even possible?

Europe lacks both the political will of the United States to pressure Taiwan and the financial capacity of China to pour massive subsidies into its industry.

It remains indecisive, forever chasing ideals instead of execution.

I would argue that Europe’s relative economic decline today ultimately stems from losing semiconductors.

Because Europe lost semiconductors, it missed the era of the personal computer.

Because it missed the PC era, it lost the software revolution that created Microsoft.

Because it lost the software era, it never gave birth to Big Tech.

And because it never gave birth to Big Tech, its economic growth stagnated.

AMD founder Jerry Sanders once said, “Real men have fabs.”

I’d like to give it a slight twist:

“Real countries have fabs.”