Why did I turn bullish on NAND? feat. HBF

SemiconSam Original Report

Recently, the stock price of SanDisk, spun off from Western Digital (WDC), has surged.

Similarly, Japan’s Kioxia Holdings, a Toshiba spin-off, has also posted a sharp rise.

The key question is: what explains this surge?

There is a widely cited saying in economics: ‘The market moves faster than anything else.’

I interpret this as a signal that the market has begun to focus on NAND.

Let’s look back for a moment.

During the worst NAND downturn of 2023–2024, everyone ignored NAND.

Unlike DRAM, the NAND market simply had too many players, which made coordinated supply cuts hard to implement. On top of that, the technological hurdles are lower than in DRAM, so China was able to expand capacity more easily.

Even the AI boom that began in late 2022 didn’t bring much benefit. Data center operators, including cloud service providers (CSPs), still preferred cheaper HDDs when building out their infrastructure.

However, I have now begun to think that demand for NAND has grown so strong that the market may soon tip into a supply shortage.

While HDDs are still cheaper today, their mechanical structure makes them slower, less efficient, and more prone to failure.

SSDs, on the other hand, are far more power-efficient than HDDs, reducing energy costs, cooling requirements, and physical space — all of which are critical factors as data storage demand continues to expand.

Currently, there are two types of SSDs attracting attention in the AI field.

One is high-capacity eSSD, and the other is the still-in-development NL SSD.

Let’s start with eSSD (enterprise SSD). Here it refers to large-capacity SSDs used mainly in inference workloads. At present, however, prices are so high that even CSPs are hesitant to adopt them.

The other is NL SSD (Nearline SSD).

Based on QLC (Quad-Level Cell) flash, Nearline (NL) SSD storage offers higher storage density compared to TLC (Triple-Level Cell) flash, while also being lower cost.

This makes QLC flash a realistic option for data centers to replace HDD capacity.

NL SSD is meant to replace NL HDD, not the high-performance QLC eSSD used in AI training and inference.

Amid the current severe HDD shortage, with lead times exceeding one year, CSPs have expressed a willingness to adopt NL SSD in large volumes, as long as the pricing is reasonable.

These factors could certainly cause a supply shortage in the NAND market, but the real reason I’m bullish on NAND in the mid to long term is High Bandwidth Flash (HBF).

What is HBF?

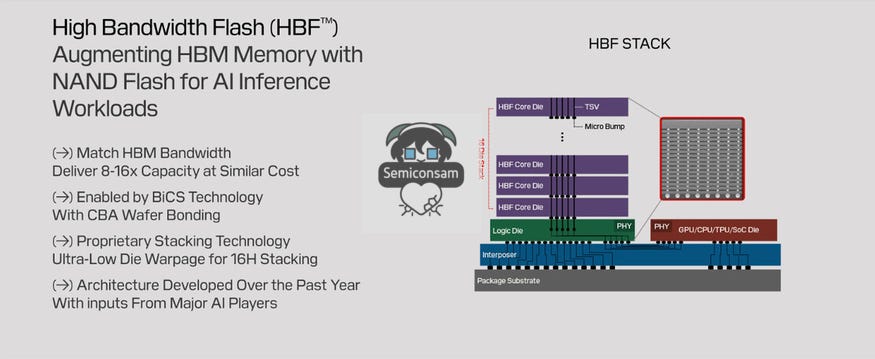

In February 2025, SanDisk announced a new product called HBF (High-Bandwidth Flash) at its Investor Day.

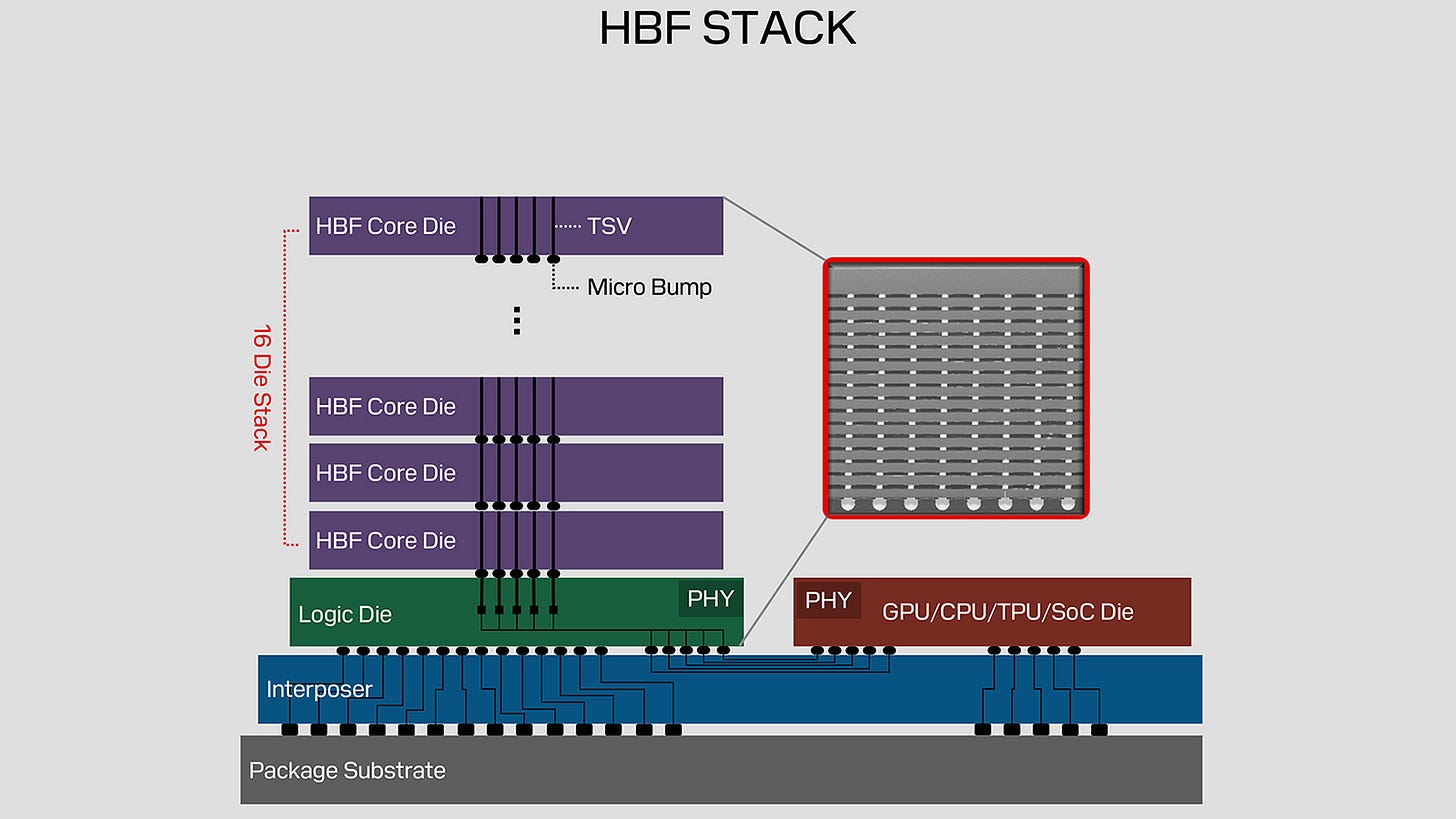

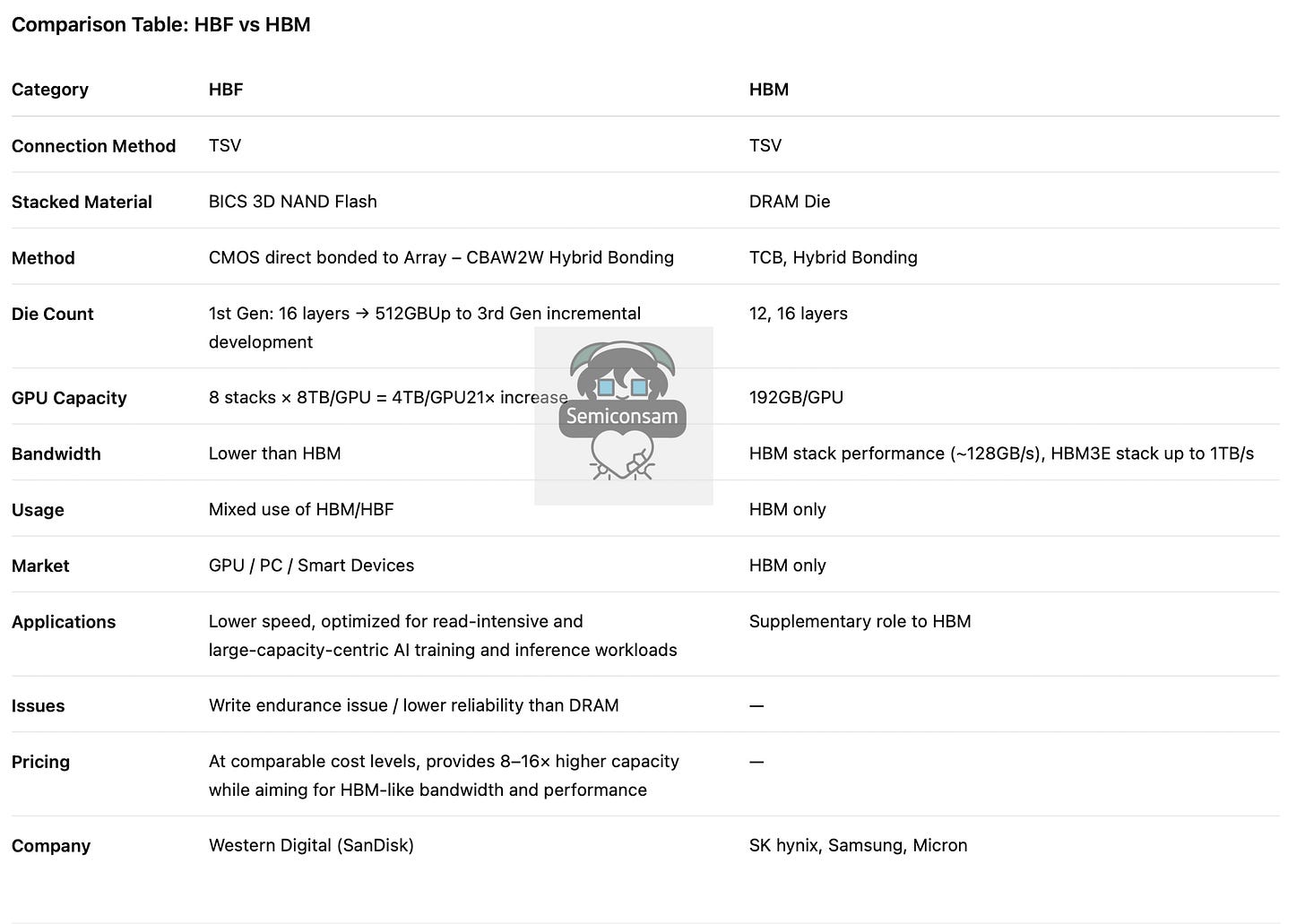

HBF is a new type of memory built by vertically stacking NAND flash. Just as HBM (High-Bandwidth Memory) is created by stacking multiple DRAM chips, HBF is made by stacking layers of NAND flash. The motivation is that the biggest bottleneck in today’s AI semiconductors occurs when data moves from memory semiconductors to logic semiconductors.

Conceptually, HBF technology is designed to allow GPUs to quickly access large amounts of NAND capacity to supplement the limited capacity of HBM.

This reduces the time spent fetching data from PCIe-connected SSDs, thereby accelerating AI training and inference workloads.

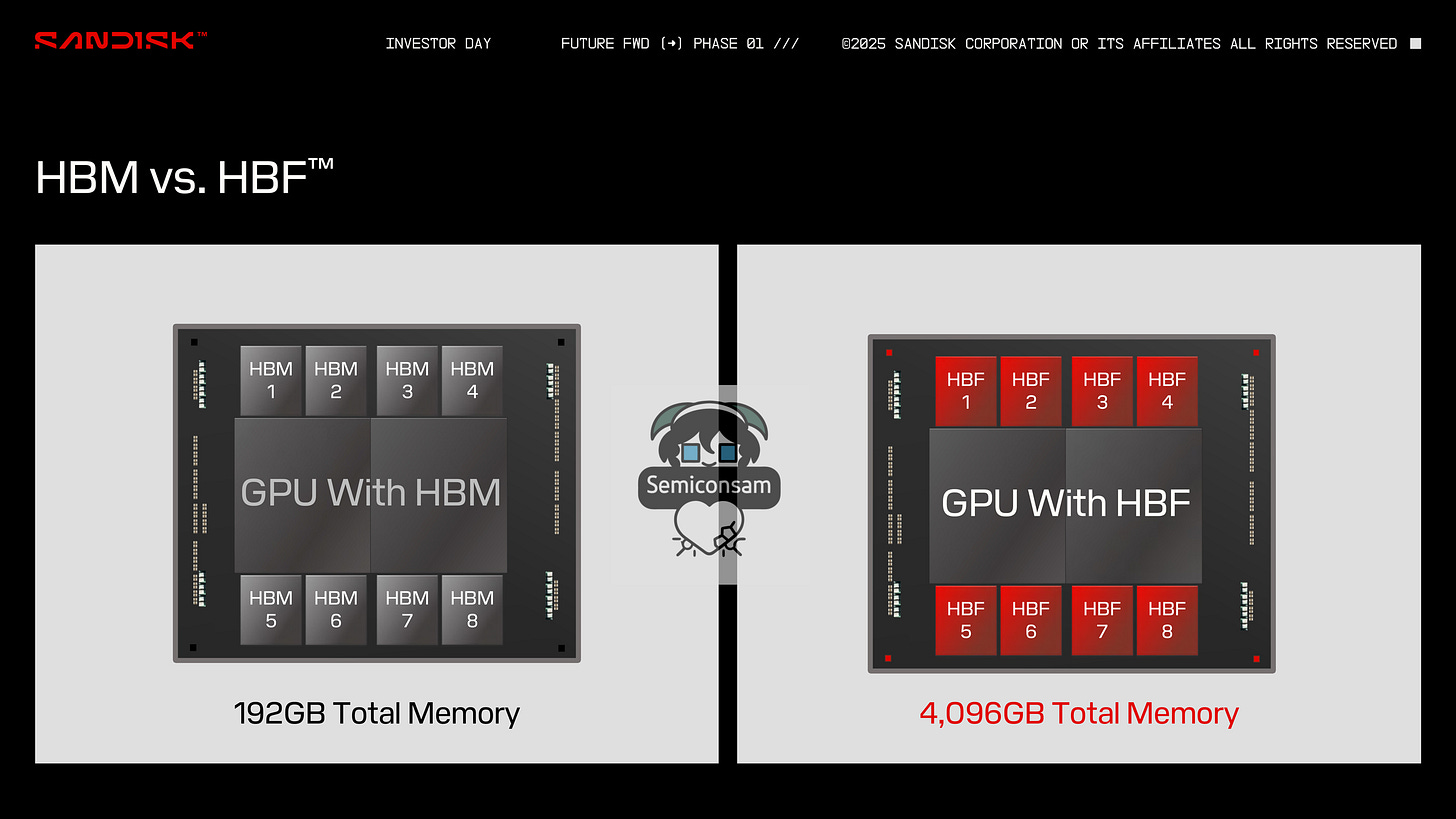

HBF access speed could be tens to hundreds of times faster than SSDs, and the first generation of HBF is expected to provide 8–16x the capacity of HBM while maintaining a similar bandwidth.

So then, does HBF replace HBM?

I don’t think so. Rather, I expect it will be used in a complementary way alongside HBM.

KAIST Professor Jung-Ho Kim, often referred to as the “father of HBM,” stated:

“Samsung Electronics and SK Hynix’s earnings are currently determined by HBM,” but added, “Ten years from now, HBF will take its place.”

The reason, according to the professor, is that improvements in artificial intelligence (AI) performance depend on both memory bandwidth and capacity. Professor Kim explained, “Today’s AI is based on the transformer deep learning architecture. To process models with as many as one million input tokens, terabyte (TB)-scale data is required.” He added, “When reading and writing such TB-scale data thousands of times per second, if memory bandwidth is insufficient, bottlenecks occur.”
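To get a feel for why million-token inputs push memory into TB territory, here is a minimal back-of-the-envelope sketch of the key/value cache a transformer keeps per input sequence. The sizing formula is the standard one for attention caches; the model shape below (80 layers, 64 key/value heads, head dimension 128, 2-byte values) is a hypothetical I picked for illustration, not a figure from Professor Kim or any specific model.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Bytes needed to hold the attention keys and values for one sequence."""
    # Factor of 2: each layer stores one key tensor and one value tensor.
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value


# Hypothetical model shape, chosen only for illustration.
size = kv_cache_bytes(num_layers=80, num_kv_heads=64, head_dim=128, seq_len=1_000_000)
print(f"{size / 1e12:.2f} TB")  # prints "2.62 TB"
```

Under these assumptions a single one-million-token sequence already needs a few terabytes before counting the model weights, which is the scale the quote is pointing at.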

If bottlenecks appear, the response speed of large language model (LLM)-based generative AI services such as OpenAI’s ChatGPT or Google’s Gemini slows down. The bottleneck originates from the current fundamental structure of computers, the von Neumann architecture, in which the CPU or GPU and memory are physically separated, making data transfer speed (bandwidth) between them critical. Professor Kim stressed, “Even if you double the size of the GPU, it is useless without sufficient memory bandwidth. AI performance is memory-bound and ultimately constrained by memory performance.”
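The "memory-bound" point can be sketched with a simple roofline-style estimate: a processing step takes as long as the slower of its two resources, compute or data movement. Every number below (weight size, FLOPs per token, peak compute, bandwidth) is an assumption I made up for illustration, not a spec of any real GPU or model.

```python
def step_time(flops, bytes_moved, peak_flops, bandwidth):
    """Roofline-style estimate: the step is limited by its slower resource."""
    compute_time = flops / peak_flops      # seconds if compute were the only limit
    memory_time = bytes_moved / bandwidth  # seconds if data movement were the only limit
    return max(compute_time, memory_time)


# Hypothetical numbers for generating one token.
weights_bytes = 140e9    # assumed: 70B parameters at 2 bytes each, read once per token
flops_per_token = 140e9  # assumed: roughly 2 FLOPs per parameter
peak_flops = 1e15        # assumed accelerator compute, FLOP/s
bandwidth = 3e12         # assumed memory bandwidth, bytes/s

base = step_time(flops_per_token, weights_bytes, peak_flops, bandwidth)
double_compute = step_time(flops_per_token, weights_bytes, 2 * peak_flops, bandwidth)
double_bandwidth = step_time(flops_per_token, weights_bytes, peak_flops, 2 * bandwidth)

print(f"baseline:         {base * 1e3:.1f} ms per token")
print(f"2x compute:       {double_compute * 1e3:.1f} ms per token")
print(f"2x bandwidth:     {double_bandwidth * 1e3:.1f} ms per token")
```

With these assumed inputs the step is memory-bound: doubling compute leaves the time per token unchanged, while doubling bandwidth halves it.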

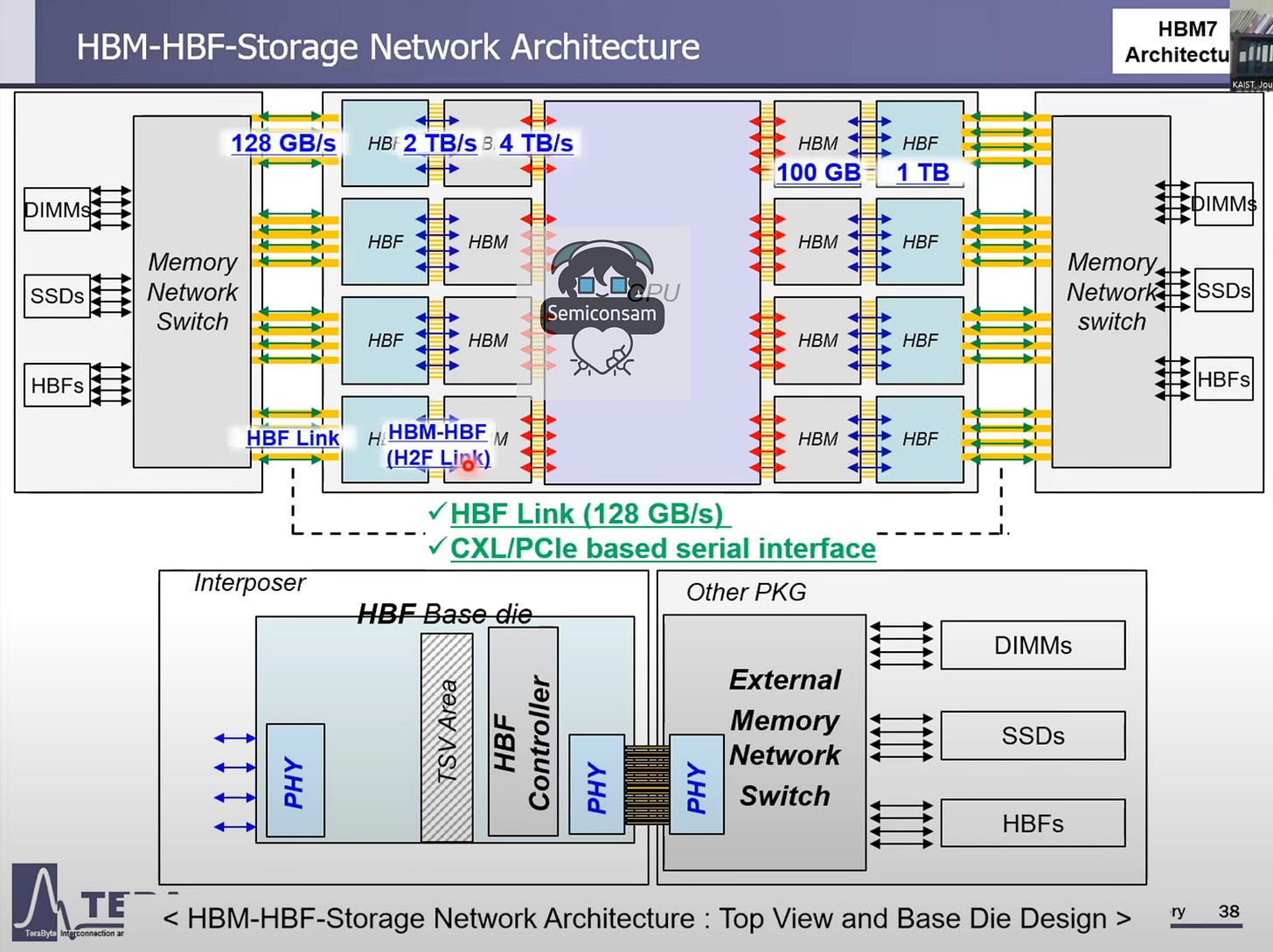

Professor Kim argues that HBF can take the place of LPDDR on top of existing HBM-based dies. In this way, HBF compensates for HBM’s limited capacity by serving as storage for large-scale AI models directly within the GPU. HBM acts as a cache that temporarily processes data at high speed, while HBF functions as the memory that holds the massive AI models themselves.

In the paywalled section that follows, I will analyze the companies that are expected to benefit from this.

![Pros and cons of HBF, HBM, and DDR5. HBF offers capacity while maintaining bandwidth similar to HBM. However, drawbacks such as longer latency mean it is expected to be used together with HBM. (Source: reporter 진운용)](https://substackcdn.com/image/fetch/$s_!qtMz!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Ffd06f845-c397-4423-87d1-0fd97dce1f14_600x203.png)