Why Should We Pay Attention to Broadcom?

There’s No Such Thing as a Baseless Stock Surge.

To Begin: The Argument That AI Performance Hinges on Memory

I am confident in asserting that improving AI performance fundamentally depends on memory.

The success of large language models (LLMs) is not merely tied to the computational power of GPUs but is grounded in the speed of data processing and the energy efficiency provided by memory. Memory has become the cornerstone of AI semiconductor design.

Let’s start with a simple example.

When generating a single token in an LLM, the entire model's weights must be read from memory. Assuming a model size of 100GB and a target of 100ms (0.1s) per token, a straightforward calculation shows that the memory bandwidth required to generate one token is 100GB / 0.1s = 1TB/s. However, additional factors such as cache access and inefficiencies in the system must be considered. Consequently, the required bandwidth is likely more than twice this figure: at least 2TB/s.
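The arithmetic above can be sketched in a few lines of Python. This is only an illustration: the function name `required_bandwidth_tb_s` and the `overhead_factor` parameter are assumptions introduced here, the 2x factor simply mirrors the rough "more than twice" estimate in the text, and 1TB is taken as 1000GB.

```python
def required_bandwidth_tb_s(model_size_gb: float,
                            token_time_s: float,
                            overhead_factor: float = 1.0) -> float:
    """Bandwidth (TB/s) needed to read the whole model once per token.

    overhead_factor is a hypothetical multiplier standing in for cache
    access and other system inefficiencies (assumption, not measured).
    """
    if token_time_s <= 0:
        raise ValueError("token_time_s must be positive")
    gb_per_s = model_size_gb / token_time_s * overhead_factor
    return gb_per_s / 1000.0  # decimal units: 1 TB = 1000 GB


# The 100GB model and 0.1s per token come from the example in the text.
ideal = required_bandwidth_tb_s(100, 0.1)
with_overhead = required_bandwidth_tb_s(100, 0.1, overhead_factor=2.0)
print(f"ideal: {ideal:.1f} TB/s, with 2x overhead: {with_overhead:.1f} TB/s")
```

The point of the sketch is that bandwidth scales linearly with model size and inversely with the time budget per token, so either a bigger model or a tighter latency target raises the requirement.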

The Demands of LLMs and the Importance of Memory Performance

This simple calculation demonstrates how critical memory is. As AI models grow larger and more complex, memory performance becomes the most significant factor in determining the speed and efficiency of LLM inference.

1. Maintaining Token Generation Times

As model sizes increase, faster and more powerful memory becomes essential to generate tokens within the same timeframe (e.g., 100ms).

• When models grow from 100GB to several hundred gigabytes or even terabytes, existing memory technologies are insufficient to meet these demands.
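To see why a fixed memory system falls behind as models grow, the sketch below inverts the earlier calculation: given a fixed bandwidth, how long does one token take? The function name `token_time_ms` and the model sizes in the loop are hypothetical values chosen for illustration. It assumes one full pass over the weights per token and ignores all overhead.

```python
def token_time_ms(model_size_gb: float, bandwidth_tb_s: float) -> float:
    """Time (ms) to stream the whole model once at a given bandwidth."""
    if bandwidth_tb_s <= 0:
        raise ValueError("bandwidth_tb_s must be positive")
    seconds = model_size_gb / (bandwidth_tb_s * 1000.0)  # 1 TB = 1000 GB
    return seconds * 1000.0


# Hypothetical model sizes; 1 TB/s is the baseline figure from the text.
for size_gb in (100, 300, 1000):
    ms = token_time_ms(size_gb, 1.0)
    print(f"{size_gb:>5} GB at 1 TB/s -> {ms:.0f} ms per token")
```

Under these assumptions, keeping the 100ms target for a model ten times larger would require roughly ten times the bandwidth.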

2. Increasing Complexity of Inference Tasks

It’s not just about growing model sizes.

• Sequence length increases, requiring more frequent and expansive data access.

• Reasoning tokens and other high-level computations introduce new dimensions of complexity, pushing memory bandwidth to its limits and leading to performance bottlenecks.
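One concrete way longer sequences add memory traffic is the attention key/value cache, which the original post does not discuss by name; it is brought in here only as an illustration. The sketch below uses the standard size estimate for a transformer's key/value cache. All model dimensions are hypothetical and do not describe any specific model.

```python
def kv_cache_bytes(seq_len: int,
                   num_layers: int,
                   num_kv_heads: int,
                   head_dim: int,
                   bytes_per_value: int = 2) -> int:
    """Approximate key/value cache size for one sequence.

    The factor 2 accounts for storing one key and one value vector
    per layer, per KV head, per token. bytes_per_value=2 assumes
    16-bit values (assumption).
    """
    per_token = 2 * num_layers * num_kv_heads * head_dim * bytes_per_value
    return per_token * seq_len


# Hypothetical configuration, chosen only to show the linear growth.
config = dict(num_layers=80, num_kv_heads=8, head_dim=128)
for seq_len in (1_024, 8_192, 65_536):
    gb = kv_cache_bytes(seq_len, **config) / 1e9
    print(f"seq_len={seq_len:>6}: about {gb:.2f} GB of cache per sequence")
```

Because this cache is read again for every generated token, its growth with sequence length adds to the bandwidth already needed for the model weights.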

Ultimately, maintaining and improving inference performance in LLMs demands ultra-high-speed memory technology.

HBM: The Optimal Solution for LLMs

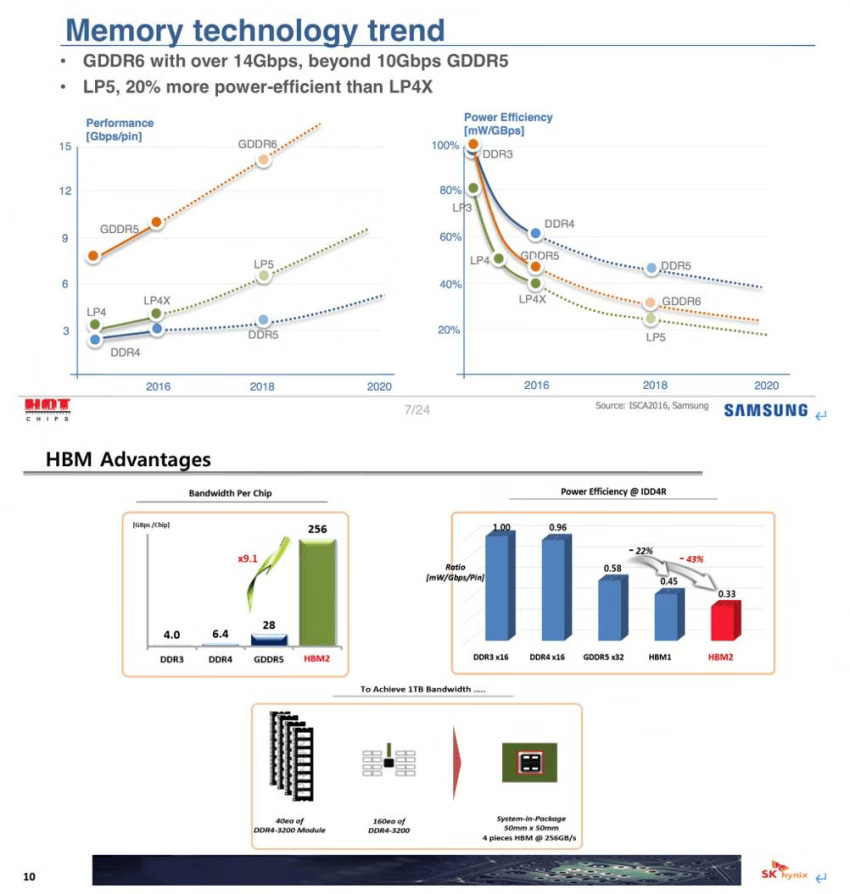

HBM (High Bandwidth Memory) is the memory technology that addresses these demands. It surpasses traditional DRAM in both bandwidth and energy efficiency, establishing itself as a crucial technology for LLMs.

1. Key Features of HBM

• Ultra-high bandwidth: With data transfer rates exceeding 1TB/s, HBM resolves bottlenecks in large-scale models.

• Energy efficiency: Its compact structure reduces data transfer distances, significantly lowering power consumption and operational costs in large-scale AI models.

2. Comparison with Traditional Memory

• GDDR and LPDDR each offer advantages in bandwidth and energy efficiency, respectively. However, HBM balances both aspects, making it the ideal choice for AI applications.

• For tasks requiring ultra-fast memory access, such as LLM inference, HBM is effectively the only viable option.

Summary: The Co-Evolution of AI and Memory

The essence of advancing AI performance lies in the evolution of memory technology. Large-scale AI models like LLMs cannot overcome their inherent limitations with computational power alone; their competitive edge increasingly depends on memory performance and data accessibility.

As such, high-speed memory technologies like HBM and the memory controllers that optimize their use are indispensable to AI semiconductor design. Moving forward, AI and memory technologies will continue to develop in tandem, with faster and more efficient memory solutions becoming the key to unlocking the next breakthroughs in AI innovation.

Now that we have enough background knowledge, let’s talk about Broadcom.

A Closer Look at Broadcom: The Key Player in HBM Controller Technology

For HBM (High Bandwidth Memory) technology to achieve its full potential, it requires more than just exceptional memory modules. The role of the HBM controller, which manages and optimizes the use of this high-performance memory, is absolutely critical. In this field, Broadcom holds a distinct and dominant position.

1. Focus on the IP Business

• Broadcom prioritizes its Intellectual Property (IP) business over manufacturing finished products.

• This approach has attracted major tech companies like Google and Microsoft, who leverage Broadcom’s technology to design their AI semiconductors.

2. Differentiation from DDR Controllers

• While traditional DDR controllers had relatively straightforward architectures, HBM controllers demand a far higher level of complexity.

• An HBM controller goes beyond simple data transfer functionality and must be designed together with advanced technologies such as 2.5D packaging to optimize performance and efficiency.

3. A Market with Few Competitors (Effectively Dominated by NVIDIA and Broadcom)

• Designing HBM controllers requires significant expertise, with each manufacturing process necessitating new and customized designs. This meticulous effort is akin to the craftsmanship of Japanese analog artisans.

• These high barriers to entry mean that only a handful of companies, such as NVIDIA and Broadcom, are capable of competing effectively in this space.

HBM and Broadcom: The Present and Future of AI Semiconductors

HBM technology is far more than just another memory innovation in the AI semiconductor industry. It serves as a critical factor that directly impacts a chip’s performance and energy efficiency. However, to fully capitalize on HBM’s capabilities, an exceptional HBM controller is indispensable. (It is puzzling why mainstream media seldom focuses on this crucial aspect.)

Broadcom has established itself as a leader in the HBM controller market, leveraging its expertise to collaborate with major technology companies and drive advancements in AI semiconductor design.

In conclusion, Broadcom’s dominance in the HBM controller IP business positions it at the forefront of AI semiconductor innovation. This is a testament to the fact that HBM controllers are not merely supporting technologies but are fundamental to unlocking the full potential of AI chips.

Reference: https://www.facebook.com/share/19j3Q5CbvB/

This article is based on a post by Lee Dong-soo, Executive Director at Naver Cloud, with my personal thoughts added to complete the content.